End-to-end-ish tests using fake HTTP in Flutter

Writing end-to-end tests is pretty expensive. Typically, they use real devices or sometimes a simulator/emulator and real backend services. That usually means that they end up being pretty slow and they tend to be somewhat flaky. That isn't to say that they're not worth it for some teams or for a subset of the features in your app. However, I'm here to tell you (or maybe just remind you) that tests and test coverage aren't the goal in and of themselves. We write tests in order to prove our features work as intended and we run those tests consistently to prove that our features don't stop working as intended.

That means our goal when writing tests should be to reach our target level of confidence that our features work as intended, as affordably as possible. It's a spectrum. On one end is 100% test coverage using all the different kinds of tests: solitary unit tests, sociable more-integrated tests, and end-to-end tests; all features, fully covered, no exceptions. On the other end there are no tests at all; YOLO, just ship it. At Betterment, we definitely prefer to be closer to the 100% coverage end of the spectrum, but we know that in practice that's not a feasible end state if we want to ship changes quickly and give our engineers rapid feedback on their proposed changes.

So what do we do? Well, we aim to find an affordable, maintainable spot on that testing spectrum, à la Justin Searls' advice. We focus on writing expressive, fast, and reliable solitary unit tests, some sociable integrated tests of related units, and some "end-to-end-ish" tests. It's that last bucket of tests that's the most interesting, and it's what the rest of this post will focus on.

What are "end-to-end-ish" tests?

They're an answer to the question "how can we approximate end-to-end tests for a fraction of the cost?"

In Flutter, the way to write end-to-end tests is with flutter_driver and the integration_test package. These tests use the same WidgetTester API that regular widget tests use, but they are designed to run on a simulator, emulator, or preferably a real device. They're pretty easy to write (just as easy as regular widget tests) but hard-ish to debug and very slow to run. Where a widget test runs in anywhere from a fraction of a second to a second, one of these integration tests takes many seconds.

We love the idea of these tests, the level of confidence they'd give us that our app works as intended, and how they'd eliminate manual QA testing, but we loathe the cost of running them, both in terms of time and actual $$$ of CI execution.

So, we decided that we really only want to write these flutter_driver end-to-end tests for a tiny subset of our features, almost like a "smoke testing" suite that would signal us if something was seriously wrong with our app. That might include a single happy-path test apiece for features like log-in and sign-up. But that leaves us with a pretty large gap where it's way too easy for us to accidentally create a feature that depends on some Provider that's not provided and our app blows up at runtime in a user's hands. Yuck!

Enter end-to-end-ish tests (patent pending 😉). These tests are as close to end-to-end tests as we can get without actually running on a real device using flutter_driver. They look just like widget tests (because they are just widget tests), but they boot up our whole app, run all the real initialization code, and rely on all our real injected dependencies, with a few key exceptions (more on that next). This gives us confidence that all our code is configured properly, all our dependencies are provided, our navigation works, and the user can tap on whatever and see what they'd expect to see. You can read more about this approach here.

"With a few key exceptions"

If the first important distinction of end-to-end-ish tests is that they don't run on a real device with flutter_driver, the second is that they don't rely on a real backend API. Most apps, ours included, talk to one (or sometimes a few) backend APIs, typically over HTTP. So we inject a fake HTTP configuration into our network stack, which lets us run nearly all of our code for real while cutting out the other unreliable, costly dependency: the live backend.

The last important hurdle is native plugins. Widget tests typically don't run on a real device or a simulator/emulator, so they run in a context where we should assume the underlying platform can't support real plugins. That means we also have to inject fake implementations of any plugins we use. What I mean by fake plugins is really simple. When we set up a new plugin, we wrap it in a class that we inject into our app. Making a fake implementation of that plugin is then typically as easy as making another class, prefixing its name with Fake, and having it implement the public contract of the regular wrapper class with realistic but canned behavior. It's a standard test double, and it does the trick.
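
As an illustration of that pattern, here is a minimal sketch assuming a hypothetical key-value wrapper around a storage plugin such as shared_preferences. The names KeyValueStore and FakeKeyValueStore are made up for this example; a production implementation would delegate to the plugin, while the fake keeps everything in memory.

```dart
/// Hypothetical contract the app depends on instead of calling a storage
/// plugin directly. A production implementation would delegate to the plugin.
abstract class KeyValueStore {
  Future<String?> read(String key);
  Future<void> write(String key, String value);
  Future<void> delete(String key);
}

/// Test double with the same public contract, backed by an in-memory map so
/// it works in widget tests where no platform implementation is available.
class FakeKeyValueStore implements KeyValueStore {
  FakeKeyValueStore([Map<String, String> initial = const {}])
      : _values = Map.of(initial);

  final Map<String, String> _values;

  @override
  Future<String?> read(String key) async => _values[key];

  @override
  Future<void> write(String key, String value) async {
    _values[key] = value;
  }

  @override
  Future<void> delete(String key) async {
    _values.remove(key);
  }
}
```

A test can seed the fake with whatever state its scenario needs, e.g. FakeKeyValueStore({'onboardingComplete': 'true'}), and inject it wherever the real wrapper would normally go.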

It's definitely a bummer that we can't exercise that real plugin code, but when you think about it, that plugin code is tested in the plugin's own test suite. Often, the plugin code is integration tested as well, because the benefits outweigh the costs for many plugins; e.g., the shared_preferences plugin can use a single integration test to provide certainty that it works as intended. Ultimately, using fake plugins works well and makes this a satisfyingly functional testing solution.

About that fake HTTP thing

One of the most interesting bits of this solution is the way we inject a fake HTTP configuration into our network stack.

Before building anything ourselves, we did some research to figure out what the community had already done. Unfortunately, our google-fu was bad and we didn't find anything until after we went and implemented something ourselves. Points for trying though, right? Eventually, we found nock. It's similar to libraries for other platforms that allow you to define fake responses for HTTP requests using a nice API and then inject those fake responses into your HTTP client. It relies on the dart:io HttpOverrides feature. It actually configures the current Zone's HTTP client builder to return its special client so that any code in your project that finds its way to using the dart:io HTTP client to make a request will end up routed right into the fake responses. It's clever and great. I highly recommend using it. We, however, are not using it.
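
To see the dart:io mechanism that nock builds on, here is a small sketch (not nock's code) that installs an HttpOverrides subclass for a zone. Any HttpClient() constructed inside HttpOverrides.runWithHttpOverrides is created by the override's createHttpClient, which is the hook a fake-HTTP library can use to hand back its own client. TaggingHttpOverrides and userAgentOfNewClient are hypothetical names; a real fake would return a client that answers requests from canned responses instead of merely tagging its user agent.

```dart
import 'dart:io';

/// Hypothetical override that counts and tags the clients it creates, to show
/// that plain `HttpClient()` calls are routed through this hook.
class TaggingHttpOverrides extends HttpOverrides {
  int clientsCreated = 0;

  @override
  HttpClient createHttpClient(SecurityContext? context) {
    clientsCreated++;
    // super.createHttpClient builds the default dart:io client.
    final client = super.createHttpClient(context);
    client.userAgent = 'fake-http-demo';
    return client;
  }
}

/// Constructs an HttpClient the way app code normally would and reports
/// which user agent it ended up with.
String userAgentOfNewClient() {
  final client = HttpClient();
  final agent = client.userAgent ?? '';
  client.close(force: true);
  return agent;
}
```

Because the override is attached to a zone, code running outside runWithHttpOverrides still gets the default client, which keeps the fake scoped to the code under test.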

How we wrote our own fake HTTP client adapter

As I said, we didn't find nock until after we wrote our own solution. Fortunately, it was a fun experience and it took very little time! It also meant that we ended up with an API that fit our exact needs rather than having to reframe our approach to fit what nock was able to offer us. The solution we came up with is called charlatan, and it's open-source and available on pub.dev. Both libraries are great and each is designed for a specific challenge, so check both of them out and decide which one fits your needs.

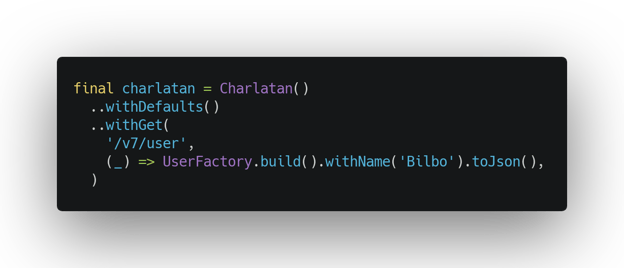

Here's what our API looks like and how we use it to set up a fake HTTP client for our tests.

Here you can see how to construct an instance of the Charlatan class and then use its methods like whenGet to configure it with fake responses that we want to see when we make requests to the configured URLs. We've also created an extension method withDefaults that allows us to configure a bunch of common, default responses so that we don't have to specify those in each and every test case. This is useful for API calls that always behave the same way, like POSTs that return no body, and to provide a working foundation of responses. When a test case cares about the specifics of a response, it can override that default.
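
The shape of that configuration API can be sketched in plain Dart. This is not Charlatan's implementation: FakeResponses, FakeResponse, these whenGet/whenPost signatures, and the contents of withDefaults are all hypothetical, and paths are matched exactly for brevity. It shows the two ideas from the paragraph above: defaults registered up front, and a test case overriding one of them by registering the same route again.

```dart
/// A canned response: status code plus an optional JSON-like body.
class FakeResponse {
  const FakeResponse(this.statusCode, [this.body]);
  final int statusCode;
  final Object? body;
}

/// Hypothetical registry of fake responses, organized by HTTP method and path.
class FakeResponses {
  final Map<String, Map<String, FakeResponse>> _byMethod = {};

  void whenGet(String path, FakeResponse response) =>
      _register('GET', path, response);

  void whenPost(String path, FakeResponse response) =>
      _register('POST', path, response);

  // Registering the same method and path again replaces the earlier entry,
  // which is what lets a test override a default.
  void _register(String method, String path, FakeResponse response) {
    _byMethod.putIfAbsent(method, () => {})[path] = response;
  }

  FakeResponse? find(String method, String path) => _byMethod[method]?[path];
}

/// Hypothetical defaults shared by every test case.
extension FakeResponseDefaults on FakeResponses {
  FakeResponses withDefaults() {
    whenGet('/api/feature-flags',
        const FakeResponse(200, {'newOnboarding': false}));
    // A POST that always succeeds with no body.
    whenPost('/api/analytics/events', const FakeResponse(204));
    return this;
  }
}
```

A test that cares about a specific response registers it after calling withDefaults(), and the later registration wins.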

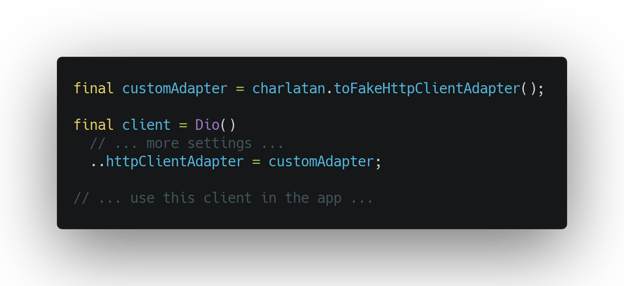

The last important step is to make sure to convert the Charlatan instance into an adapter and pass that into our HTTP client so that the client will use it to fulfill requests.
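
With dio, that means assigning the adapter to the client's httpClientAdapter property. The sketch below shows the same seam without depending on dio: HttpAdapter, AdapterResponse, CannedAdapter, and ApiClient are hypothetical stand-ins for dio's HttpClientAdapter, its response type, a fake adapter, and the HTTP client. App code only talks to ApiClient, so swapping the adapter is the single change a test needs.

```dart
import 'dart:convert';

/// Status code and raw body, as returned by an adapter.
class AdapterResponse {
  const AdapterResponse(this.statusCode, this.body);
  final int statusCode;
  final String body;
}

/// Hypothetical transport contract, standing in for dio's HttpClientAdapter.
abstract class HttpAdapter {
  Future<AdapterResponse> fetch(String method, String path);
}

/// Fake adapter that answers from a fixed table instead of the network.
class CannedAdapter implements HttpAdapter {
  CannedAdapter(this._responses);

  /// Keys have the form 'METHOD /path', e.g. 'GET /api/user'.
  final Map<String, AdapterResponse> _responses;

  @override
  Future<AdapterResponse> fetch(String method, String path) async {
    final response = _responses['$method $path'];
    if (response == null) {
      throw StateError('No fake response registered for $method $path');
    }
    return response;
  }
}

/// The client the rest of the app uses. It only sees the HttpAdapter
/// contract, so tests can hand it a fake without touching app code.
class ApiClient {
  ApiClient(this._adapter);
  final HttpAdapter _adapter;

  Future<Map<String, dynamic>> getJson(String path) async {
    final response = await _adapter.fetch('GET', path);
    if (response.statusCode != 200) {
      throw StateError('GET $path failed with ${response.statusCode}');
    }
    return jsonDecode(response.body) as Map<String, dynamic>;
  }
}
```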

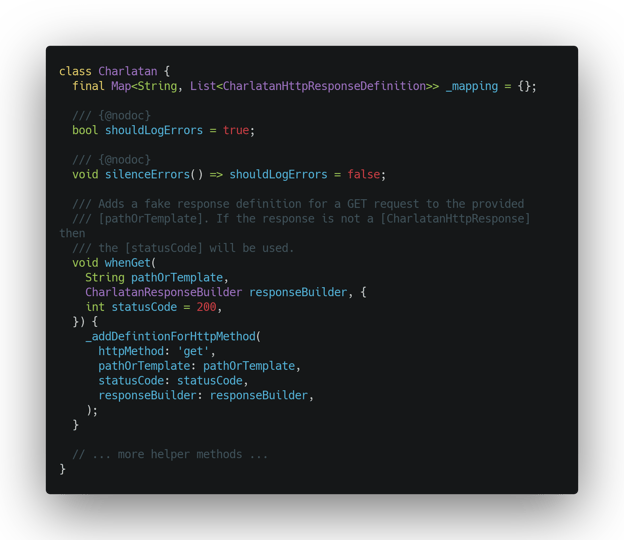

Here's a peek inside the Charlatan API. It's just collecting fake responses and organizing them so that they're easy to access later.

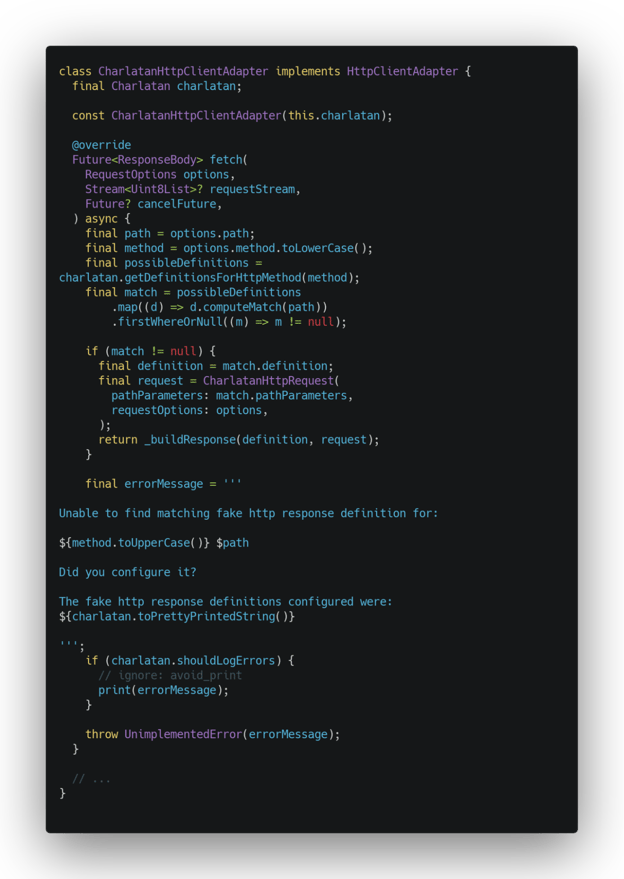

As you can see, the internals are pretty tiny. We provide a class that exposes the developer-friendly configuration API for fake responses, and we implement the HttpClientAdapter interface provided by dio. In our app we use dio and not dart:io's built-in HTTP client mostly due to preference and slight feature set differences. For the most part, the code collects fake responses and then smartly spits them back out when requested.

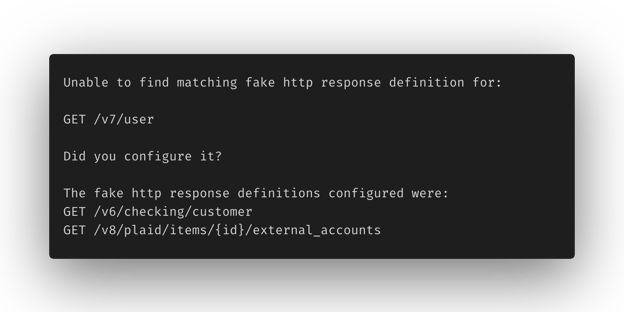

The key functionality (Ahem! Magic ✨) is only a few lines of code. We use the uri package to support matching templated URLs rather than requiring developers to pass in exactly matching strings for the requests their tests will make. We store fake responses with a URI template, a status code, and a body. If we find a match, we return it; if we don't, we throw a helpful exception to guide the developer on how to fix the issue.
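
The matching step can be approximated without the uri package. The sketch below swaps in a hand-rolled technique: it turns a template such as /users/{id} into a regular expression, extracts path parameters, and throws an error listing the registered templates when nothing matches. FakeRoute, findRoute, and NoFakeResponseError are hypothetical names, and the sketch only handles simple {name} path segments, not the full RFC 6570 template syntax the uri package supports.

```dart
/// A registered fake: method, URI template, status code, and body.
class FakeRoute {
  FakeRoute(this.method, this.template, this.statusCode, this.body)
      : _pattern = _compile(template);

  final String method;
  final String template;
  final int statusCode;
  final Object? body;
  final RegExp _pattern;

  // Converts '/users/{id}' into ^/users/(?<id>[^/]+)$. Literal text is
  // escaped so characters like '.' or '?' match themselves.
  static RegExp _compile(String template) {
    final buffer = StringBuffer('^');
    var last = 0;
    for (final m in RegExp(r'\{(\w+)\}').allMatches(template)) {
      buffer.write(RegExp.escape(template.substring(last, m.start)));
      buffer.write('(?<${m.group(1)}>[^/]+)');
      last = m.end;
    }
    buffer.write(RegExp.escape(template.substring(last)));
    buffer.write(r'$');
    return RegExp(buffer.toString());
  }

  /// Returns the extracted path parameters, or null if there is no match.
  Map<String, String>? matchParams(String method, String path) {
    if (method != this.method) return null;
    final m = _pattern.firstMatch(path);
    if (m == null) return null;
    return {for (final name in m.groupNames) name: m.namedGroup(name)!};
  }
}

class NoFakeResponseError extends Error {
  NoFakeResponseError(this.message);
  final String message;

  @override
  String toString() => 'NoFakeResponseError: $message';
}

/// Returns the first route matching [method] and [path], or throws an error
/// that shows what was registered and how to fix the test setup.
FakeRoute findRoute(List<FakeRoute> routes, String method, String path) {
  for (final route in routes) {
    if (route.matchParams(method, path) != null) return route;
  }
  final known = routes.map((r) => '  ${r.method} ${r.template}').join('\n');
  throw NoFakeResponseError(
    'No fake response for $method $path.\n'
    'Registered templates:\n$known\n'
    'Register a fake response for this request in your test setup.',
  );
}
```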

Takeaways

Testing software is important, but it's not trivial to write a balanced test suite for your app's needs. Sometimes, it's a good idea to think outside the box in order to strike the right balance of test coverage, confidence, and maintainability. That's what we do here at Betterment. Come join us!